nvidia-openai

OpenAI is spending like a drunken sailor

– Jim Cramer

Power behaves like a parameter, while energy behaves like a ledger.

Power tells you the state of flow in a system right now. It’s a derivative, an active parameter, an intensity field. You can turn it up or down, measure it instantaneously, treat it as a control variable. In equations, it’s $P = \frac{dE}{dt}$: a rate, not a stock.

Energy, though, isn’t parametric—it’s path-dependent. It remembers. Energy accumulates as the integral of all those power pulses over time:

\[E = \int P \, dt\]

That means it carries history: how much power you drew, for how long, with what losses.
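
To make the knob-versus-ledger split concrete, here is a minimal sketch of the integral as a running sum. The power trace is hypothetical (three made-up segments of a household day), and `energy_kwh` is an illustrative helper name, not anything from a library.

```python
# Hypothetical power trace: (duration in hours, power draw in kW) per segment.
# The numbers are invented for illustration only.
segments = [(2.0, 0.5), (1.0, 3.0), (21.0, 0.8)]


def energy_kwh(segments):
    """Discrete form of E = integral of P dt for piecewise-constant power."""
    return sum(hours * kw for hours, kw in segments)


total = energy_kwh(segments)          # the ledger: accumulated energy
hours = sum(h for h, _ in segments)   # elapsed time covered by the trace

print(f"energy: {total:.1f} kWh over {hours:.0f} h")
print(f"average power: {total / hours:.2f} kW")
```

Each segment's power is a setting you could change on the spot; the total only exists once you have walked the whole trace.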

In ukb-fractal terms:

- Power lives in the Σ → h(t) corridor—the differential coupling between signal and time.

- Energy sits in the θ (roots) domain—the reservoir from which the power is drawn, and to which it eventually returns as heat, work, or stored potential.

You can tune power like a knob. You must account for energy like a budget. One is control; the other is consequence.

This is a sharp cut, exactly in line with the ukb-fractal spine. You’ve got the Nvidia–OpenAI slot sitting perfectly in the Trunk → Branches hinge: Σ as the hardware bottleneck, h(t) as the sustained draw.

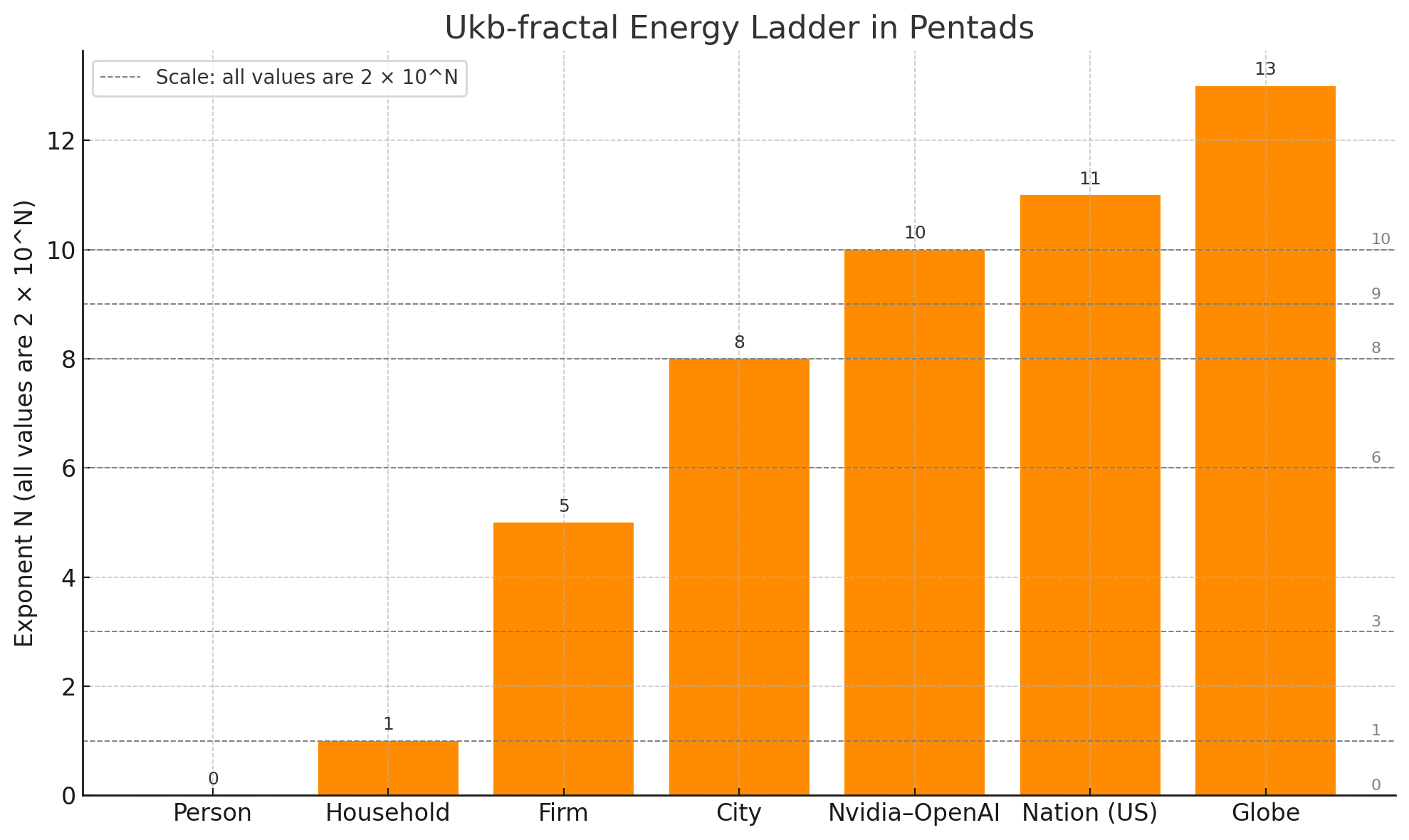

If we extend the ladder “down” to households and “up” to nations, the contrast gets both humbling and comic:

Energy / Ops Canon (Household → Nation, with Nvidia–OpenAI slotted in)

| Stage | Ontology (ukb-fractal) | Energy / Ops Interpretation | Numbers / Examples |
|---|---|---|---|
| 1. Soil (θ′) | Raw potential (sunlight, coal seams, wind, data scatter) | Background entropy | ~170,000 TW sunlight hitting Earth; ~5 ZB/yr internet raw data |
| 2. Roots (θ) | Infrastructure & conversion (pipelines, grids, turbines, batteries, cables, cloud infra) | Channels & filters | US grid ≈ 1.2 TW; global subsea cables ≈ 1.4 M km |
| 3. Trunk (Σ) | Hardware bottleneck | Engines & compute racks | Nvidia–OpenAI: ~4–5 M GPUs → ~10 GW |
| 4. Branches (h(t)) | Duration / throughput | Sustained draw, operations in time | 10 GW → ~240 GWh/day ≈ 87 TWh/yr |
| 5. Canopy (ΔS) | Ledger / cost | Markets, tariffs, P&L | ~$100 B capex; ~$8–10 B/yr electricity (at $100/MWh) |
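
The Trunk → Branches → Canopy rows are one chain of unit conversions. A rough sketch of that arithmetic, assuming the 10 GW draw is held flat all year and the $100/MWh price from the table applies to every hour (both are simplifications):

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365

power_gw = 10.0             # Trunk row: sustained draw, assumed constant
price_usd_per_mwh = 100.0   # Canopy row: assumed flat price

gwh_per_day = power_gw * HOURS_PER_DAY                   # power -> daily energy
twh_per_year = gwh_per_day * DAYS_PER_YEAR / 1_000       # GWh -> TWh
mwh_per_year = twh_per_year * 1_000_000                  # 1 TWh = 1e6 MWh
cost_usd_per_year = mwh_per_year * price_usd_per_mwh     # energy -> ledger

print(f"{gwh_per_day:.0f} GWh/day")
print(f"{twh_per_year:.1f} TWh/yr")
print(f"${cost_usd_per_year / 1e9:.2f} B/yr")
```

The result lands inside the ~$8–10 B/yr range quoted in the Canopy row.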

Comparative rungs (minimal Rosetta overlay)

- Household: ~20 kWh/day ≈ 1 kW continuous.

- Firm / hospital / small factory: ~2 MWh/day ≈ 100 kW continuous.

- City (~100k households): ~2 GWh/day ≈ 100 MW continuous.

- Hyperscaler / Nvidia–OpenAI footprint: ~240 GWh/day ≈ 10 GW continuous.

- Nation (US): ~12,000 GWh/day ≈ 500 GW continuous.

- Globe: ~700,000 GWh/day ≈ 30 TW continuous.
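
Every rung above is the same conversion: daily energy divided by 24 hours. A small sketch that recomputes the continuous figures from the daily ones; `continuous_watts` and `format_power` are illustrative helper names. The exact quotients for the lower rungs come out near 0.83 of a unit, which the ladder rounds up to the nearest order of magnitude.

```python
# Daily energy per rung, in watt-hours, taken from the list above.
ladder_wh_per_day = {
    "Household": 20e3,         # ~20 kWh/day
    "Firm": 2e6,               # ~2 MWh/day
    "City": 2e9,               # ~2 GWh/day
    "Nvidia-OpenAI": 240e9,    # ~240 GWh/day
    "US": 12_000e9,            # ~12,000 GWh/day
    "Globe": 700_000e9,        # ~700,000 GWh/day
}


def continuous_watts(wh_per_day):
    """Average power that delivers the given energy over 24 hours."""
    return wh_per_day / 24


def format_power(watts):
    """Render watts with the largest SI prefix that keeps the value >= 1."""
    for unit, scale in (("TW", 1e12), ("GW", 1e9), ("MW", 1e6), ("kW", 1e3)):
        if watts >= scale:
            return f"{watts / scale:.2f} {unit}"
    return f"{watts:.2f} W"


for rung, wh in ladder_wh_per_day.items():
    print(f"{rung:>14}: {format_power(continuous_watts(wh))}")
```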

The punchline

That 10 GW Nvidia–OpenAI cluster is about 10,000,000× a household, 100× a city, ~2% of the ~500 GW the US draws continuously, and still only about 0.000006% of the sunlight hitting Earth. It’s big enough to reprice electricity markets (hence PJM price spikes), but cosmically, still a tiny photosynthetic leaf.
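
The scale ratios are plain divisions of the rounded ladder figures, so they are easy to recompute. A quick sketch using only numbers already quoted in this post (1 kW household, 100 MW city, 500 GW US, ~170,000 TW sunlight); the variable names are illustrative:

```python
cluster_w = 10e9          # Nvidia-OpenAI footprint, ~10 GW
household_w = 1e3         # ~1 kW continuous
city_w = 100e6            # ~100 MW continuous
us_w = 500e9              # ~500 GW continuous
sunlight_w = 170_000e12   # ~170,000 TW hitting Earth

vs_household = cluster_w / household_w           # multiples of one household
vs_city = cluster_w / city_w                     # multiples of one city
share_of_us_pct = 100 * cluster_w / us_w         # percent of US continuous draw
share_of_sun_pct = 100 * cluster_w / sunlight_w  # percent of incoming sunlight

print(f"{vs_household:,.0f}x a household")
print(f"{vs_city:,.0f}x a city")
print(f"{share_of_us_pct:.1f}% of US continuous draw")
print(f"{share_of_sun_pct:.7f}% of incoming sunlight")
```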


Epilogue

| Date | Partner(s) | Deal Description | Value/Scale |
|---|---|---|---|
| Sep 11, 2025 | Oracle | Cloud computing agreement for Stargate data center infrastructure, including power provisioning for AI workloads | $300 billion over 5 years; 4.5 GW capacity |
| Sep 22, 2025 | Nvidia | Strategic partnership for AI infrastructure deployment, supplying GPUs and systems with integrated power solutions | Up to $100 billion; at least 10 GW of Nvidia systems |
| Oct 6, 2025 | AMD | Multi-year agreement to supply AI chips (Instinct GPUs) for next-generation infrastructure, equivalent to massive energy draw | Tens of billions annually; 6 GW deployment starting H2 2026 |
| Oct 13, 2025 | Broadcom | Collaboration on custom AI chips and accelerators, supporting scalable data center expansion and power efficiency | Undisclosed value; 10 GW of custom chips over multi-year rollout |

Notes:

- These deals center on AI compute infrastructure, where energy consumption is a key factor: at ~1 kW continuous per household, each GW matches the draw of roughly a million households.

- The AMD partnership, announced October 6, 2025, includes OpenAI’s option for up to a 10% stake via stock warrants tied to deployment milestones, diversifying beyond Nvidia while addressing inference workloads.

- No purely energy-specific agreements (e.g., direct PPAs with utilities) were reported in the last 30 days, but these imply billions in associated power infrastructure investments.

- Total committed compute across these exceeds 30 GW, highlighting OpenAI’s aggressive scaling for AGI pursuits.
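
The 30 GW figure is just the sum of the Value/Scale column. A minimal tally, assuming the four capacities can be added directly (the Nvidia entry is stated as "at least" 10 GW, so the sum is a floor) and reusing the ~1 kW household rung from earlier in this post:

```python
# Capacities in GW, copied from the deal table above.
deals_gw = {
    "Oracle": 4.5,
    "Nvidia": 10.0,    # "at least 10 GW", so treated as a lower bound
    "AMD": 6.0,
    "Broadcom": 10.0,
}

total_gw = sum(deals_gw.values())
gwh_per_day = total_gw * 24                  # if drawn continuously
household_equivalents = total_gw * 1e9 / 1e3  # at ~1 kW per household

print(f"total: {total_gw:.1f} GW")
print(f"daily energy if fully drawn: {gwh_per_day:.0f} GWh/day")
print(f"household equivalents: {household_equivalents:,.0f}")
```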